[00:00:01] Speaker A: Our job as scientists and theorists is really often to unify these things and to say, you know, what is some coherent description where I can use all the language from all the different disciplines as we currently divide them up? Right. The disciplines aren't reflective of nature. They're historical accidents, basically.

[00:00:19] Speaker B: Do we have that cognitive ontology correct?

[00:00:22] Speaker A: No. I mean, there's pretty much no way that we're right.

[00:00:26] Speaker B: Is the simple way to say it that the goal is to build a brain? I mean, just like the title of the book.

[00:00:30] Speaker A: Yeah, absolutely.

[00:00:31] Speaker B: How has that goal?

[00:00:32] Speaker A: It's my shortest answer.

[00:00:33] Speaker B: Yeah, I love that answer.

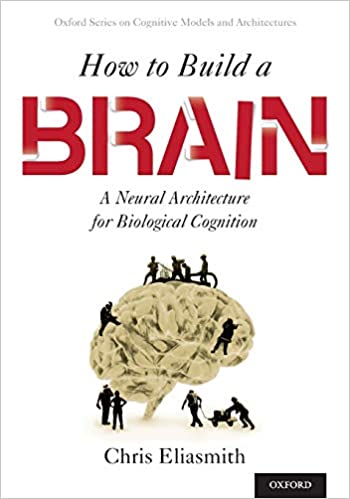

This is Brain Inspired. Semantics: how does meaning get attached to processes? Syntax: how is structure encoded and manipulated? Control: how is information flexibly controlled as needed in various situations? And learning and memory: how are learning and memory instantiated? Hey everyone, it's Paul. Those are the four questions that Chris Eliasmith spends his career at the University of Waterloo pondering and trying to answer in the form of Spaun, his attempt to build a human brain. If you've seen Chris's talks, you've seen the demonstrations of Spaun receiving task instructions through its one eye, processing the required computations in its spiking neural networks, and performing the task by using its arm to write an answer. Chris writes about all of this in his book How to Build a Brain, which I recommend and which we talk about. The book summarizes his philosophical approach as well as how he builds Spaun. He's also the co-founder and co-CEO of Applied Brain Research, an AI applications company that spun out of his lab. I also got some guest questions today from Brad Aimone, Steve Potter and Randy O'Reilly, so thanks to those folks. Show notes are at braininspired.co/podcast/90. If you find Brain Inspired valuable, consider supporting it for just a few bucks a month through Patreon. Thank you as always to the wonderful people who do that already, and thank you for listening. All right, here's Chris.

All right, so I had Randy O'Reilly on a couple of episodes ago, and I started off by asking him the question that I'm going to ask you to begin with as well. It was essentially: how often do you allow yourself to feel a sense of awe with regard to how far you've come personally, and just how much we know about brains and natural and artificial intelligence? Do you ever allow yourself to step back and just revere the amount that we've learned?

[00:02:59] Speaker A: It's funny. Yeah, it's definitely tempting to do that. We hear about fantastic developments in AI, things like GPT-3, which are really quite impressive: extremely large models that are surprisingly coherent when you speak to them, and so on.

And we can see all the kinds of deep insights that people are getting in neuroscience and the new kinds of techniques that let you record from hundreds or thousands of neurons at the same time. Much of this is completely unprecedented and super exciting, but it never takes long for me to also quickly think of examples that just seem so far away from what we can do. Right. And the amount that we're missing. If we're looking at a thousand neurons, this is like 0.0000001% of what's going on inside a brain.
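For a sense of scale, here is a back-of-the-envelope version of that arithmetic. It assumes the commonly cited estimate of roughly 86 billion neurons in the human brain, a figure that is not given in the conversation. On that assumption, a thousand recorded neurons comes to roughly a millionth of a percent of the total, which makes the same point: the recorded fraction is vanishingly small.

```python
# Back-of-the-envelope scale check.
# Assumption: ~86 billion neurons in the human brain (a commonly cited
# estimate; not a figure from the conversation).
NEURONS_IN_BRAIN = 86e9
recorded_neurons = 1_000

percent_recorded = 100 * recorded_neurons / NEURONS_IN_BRAIN
print(f"{recorded_neurons} neurons is about {percent_recorded:.1e}% of the brain")
```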

So, yeah, the awe of how far we've come is often quickly replaced with the awe of how far we have left to go.

[00:03:53] Speaker B: Do you feel that way in your own work with Spaun as well?

[00:03:56] Speaker A: I do, actually. I think, yeah. That's not an unfair way to characterize it. I look at Spaun and I can make it sound impressive. Six and a half million neurons. That's quite a few. 20 billion parameters. That's a lot of parameters. How did we figure out how to tweak them all such that they did anything at all, let alone something kind of interesting?

But very quickly, I also realize we've got 20-ish brain areas, and we definitely aren't fully modeling those, out of a thousand or more brain areas. The model is 20,000 times smaller than the brain, and 20,000 times smaller is a lot smaller. So yeah, it's the same kind of experience: I can definitely make myself feel good if I focus on the right aspects of the work, but it doesn't usually take long for me to also realize how minuscule it is from the perspective of how far we'd like to go.

[00:04:49] Speaker B: I feel like this is a cruel joke being played on us, that our reward systems can't, every once in a while, just sit in awe of all of this stuff, because we always have to think about what's the next thing. The more we know, the more we don't know. I don't know, it's a real conundrum. Well, we're going to talk about Spaun a lot during this episode, I hope. It's detailed in tons of your work, but most succinctly, I suppose, in your book How to Build a Brain, which is, what, eight years old now? Is that right?

[00:05:22] Speaker A: Yeah. Yep. Making me feel old.

[00:05:24] Speaker B: It's a really nice book. I actually just tore through it, and I wasn't able to do the tutorials, so I'm going to go through it again and do all the tutorials laid out in the book. You begin by laying out your philosophy and your approach, and then it also details how to implement these networks in software that you guys have built and that is freely available. And then you provide all these tutorials, which I'm going to go back through.

[00:05:49] Speaker A: Yeah, I'll let you know that the tutorials have actually all been updated to the latest version of Nengo, so you'll want to track those down online. The book is a little bit out of date in that regard.

[00:05:57] Speaker B: I was wondering how often that would be the case. Do you have another book in the offing or what?

[00:06:03] Speaker A: No, we basically have just replicated all of those tutorials in the new user interface and it's a much nicer package than it used to be. And it's much more closely connected to neuromorphic hardware, for instance, which is quite nice as well.

[00:06:15] Speaker B: Okay, good. We're going to talk about that as well. Let's start with your approach a little bit.

Why is it important to build large scale models of the brain?

[00:06:25] Speaker A: Right. I think we sort of touched on that with the very first question. Because the brain is very large and very complicated, and to expect explanations which are simple or uncomplicated is probably not super realistic. I think we have some fairly compact explanations of physical phenomena and people have often thought maybe that's a good model for how we'll understand biology as well. So we have equations that seem to apply to huge numbers of motions, be it planets or tiny little particles.

But that kind of simplicity is not something I think we should expect in biological explanations and biological systems. And so we really need to build models in order to express our explanations in an appropriate manner and test them, test them in the same way that we'd be testing any theory. So that's one important reason. It's just that we need big complicated models because it's a big complicated system we're trying to understand.

And then another one is because it really forces us to specify our understanding. I like to quote Richard Feynman, as I do in the book: "that which I cannot create, I do not understand." So it seems to me that we need to build mathematical models as a way of expressing what our understanding is. In some ways it's the only option, I think, when it comes to understanding something like the brain.

[00:07:45] Speaker B: But mostly what is still being worked on are smaller-scale models of particular functions, kind of in isolation, right? So you might have a working memory model, and maybe you do it in a neural network, or maybe you do it in a box-and-arrow kind of higher cognitive model. But large-scale modeling has to bring all of this stuff together into a coherent whole, although I know there's some balancing involved in developing the model: bringing in a new function means having to integrate it with the whole. So is it important to have everything working together? Is that an important constraint?

[00:08:23] Speaker A: Yeah, I think that's a little bit more of an idiosyncrasy on my part, building models which have a lot of parts and putting them together. There are models of similar sizes to Spaun, like GPT-3, but it's focused on language. Right. That's all it does.

And in fact, it's even larger than Spaun as far as the number of parameters goes. But it is more specific functionally, like you're saying. I think the reason that my work tends to focus on this kind of integration problem is that part of my academic background is as a systems design engineer. One of the things we focused on there was understanding systems, breaking them down, and coming to realize that it's the interaction between system parts where our understanding often fails, or our systems fall apart, or the real challenges arise. That's why it's important to me: if we want to understand cognition, and I think cognition comes about because of the interaction of lots of different parts of the brain, then I want to build models that try to capture my understanding of that integration.

[00:09:24] Speaker B: We talk a lot, we have talked a lot on this podcast about levels, levels of analysis and understanding. And the classic, well known version of that is David Marr's levels. So you have the implementation level, which is the hardware and how it goes about doing its thing, the algorithmic and representational level, which are sort of the steps to perform the computation. And then you have this higher level computational level, which is what computation, what function is actually being performed. And you talk about that in the book as well. But in addition to that, you talk about levels of scale and you adhere to what you call descriptive pragmatism with respect to levels of scale. What does that mean and why is that important?

[00:10:10] Speaker A: Right. So yeah, that was definitely the philosopher coming out in me there, coming up with that funny sounding term, but it's very philosophical sounding.

[00:10:17] Speaker B: Yes, it is.

[00:10:18] Speaker A: And I was actually referring to something slightly different than Marr's levels. In the philosophical literature, where people are wondering about levels, they're typically talking about levels in nature. Marr was talking specifically about levels in cognition or the brain. But more broadly, if you think about levels in nature, there have been two prominent views. One is basically a reductive kind of view, which is not that dissimilar from the way most people interpret Marr, where you've got, you know, high-level big phenomena and then you break them down into smaller parts. So you take wood and you break it down into its chemical components, you break the chemical components down into their atomic components, and you break those down and down and down. Right. Everything gets smaller. And you give explanations for how wood behaves in terms of whatever level you want to break it down to. So you're really focused on trying to implement this reductive relationship. Putnam and Oppenheim had that kind of view. Then, when people started thinking about psychology in particular, Jerry Fodor being one prominent example, they came to realize that that kind of reduction doesn't seem to work very well. So they came up with an anti-reductive stance, where they basically said that sciences like psychology stand independent of our ability to reduce them. I should be able to give psychological-level explanations that stand alone. Yeah, standalone. Whenever I try to reduce them, something falls apart. Right. That reductive relation just doesn't exist. What I'm trying to say in response is that these are essentially ontological views: you're trying to say that in nature there is a fact of the matter about how levels relate to one another. I'm instead taking an epistemic view, meaning that it's really about what we can know, or how it is useful for us to break up nature in order to know it well, that is, to generate good explanations.

And so descriptive pragmatism is saying, well, the levels I'm picking out are really just descriptions. It's an expression of my knowledge. And it's one where I try to capture things like relations between parts and wholes. I try to talk about how lower-level mechanisms give rise to higher-level behaviors. I can try to quantify the relationships: I'll write down some math to say exactly what those relationships are. Ultimately it comes down to specifying descriptions and levels in service of an explanation of some kind. So I need to say, these are the questions I'm trying to answer, and once I say that, then, okay, here is the level I'm going to talk about. If I'm asking for a molecular answer to some neuroscience question, then these are the levels I need to speak about: molecules and ion channels and some receptors and all that good stuff. But if somebody's asking a question about reaction times, then I might have another level of description. Ideally I interrelate these descriptions, of course, and that's what makes them levels. But it's not an imposition in terms of saying, this is the way nature is carved, these are the joints of nature, and so on. It doesn't have nearly the same... I think it's basically unlikely that we'll ever discover what those are. So it's maybe a little bit more humble in that regard. It's really related to what we know and how we know things.

[00:13:17] Speaker B: It's unlikely that we'll ever come up with a real, gritty correspondence between levels, however many levels there are. Let's just say psychology and neuroscience, as an example. Are we always just going to have separate vocabularies and descriptions for the phenomena we're talking about, or are there levels to be found in between, where we will eventually develop either a new vocabulary, or change our current vocabulary, to map onto concepts that we're continuing to build on?

[00:13:48] Speaker A: Yeah, exactly. And I'm definitely in the latter camp. I believe that our job as scientists and theorists is really often to unify these things and to say, what is some coherent description where I can use all the language from all the different disciplines as we currently divide them up? Right. The disciplines aren't reflective of nature. They're historical accidents, basically. So we can borrow whatever language we want. Mathematics is really nice because it's often a way of being very specific and precise about what relationships you're talking about. So yeah, we want to tell that kind of story, where we still have levels show up, but we don't expect them to align with our chosen disciplines like neuroscience and psychology. Not at all. And actually, because you brought up Marr, let me just jump on that for a second.

A lot of people use Marr as a way of supporting that reductive view, where they say, oh, Marr had levels one, two and three, the ones that you specified, and they're independent. And that kind of independence then makes you think, oh, well, I have to have some reductive relationship between them. If you read Marr, he doesn't think that. He specifies these more as a practical approach: if I'm going to give a model or an understanding, here's a useful way to start giving descriptions that are helpful. And if you read his 1982 book, Vision, and so on, it really seems like he's much more integrative than people give him credit for. I've written a couple of papers on exactly that topic, because I think being integrative more than reductive is really the right view.

[00:15:15] Speaker B: It's interesting: Tomaso Poggio, with whom David Marr originally wrote about these levels, went on to elaborate them, and Poggio recently updated his thoughts on them and added a few. I think learning was a new level, and evolution was a new level. Anyway, I don't know if you're familiar with that.

[00:15:36] Speaker A: So no, I haven't read the updated stuff.

[00:15:39] Speaker B: Okay, yeah, I'll point you to it maybe later. So then I won't ask you about it.

Let's talk about AI and intelligence in general, then. We're going to stick with a little philosophy here before we get down to it. I know you like this stuff, the philosophical stuff. Yeah. You claim in the very opening paragraphs of the book, I think, that in the last 50 years or so the real advances we've made are not necessarily technological or knowledge-based, but instead about figuring out which questions are the right questions to ask. That's another very philosophical approach, because I think it's often the job of philosophy to figure out what the right question is. Right. So could you elaborate on that a little bit? And I'm also wondering: are we asking the right questions now, or are we just asking better questions than the worse questions before?

[00:16:37] Speaker A: Yeah, this is an excellent question.

Right. I don't think we're ever going to get to the point where we're asking the right questions. I mean, it's always difficult to answer that, insofar as we can ask questions which are so general they kind of have to be right, like how does the brain work? That's a question, and it's one we'll probably ask for a really, really long time. So maybe it's right in that sense, because it will stay interesting.

But I think the more specific you make your question, the less sure you are that it's the right one. Right. It's something you're looking for an answer to, but you don't know if that answer is going to lead you in the direction of answering your higher-level questions. In the book I actually specify four questions, and I'm still asking those. I'm sure I'll be asking them for the rest of my career.

I think they're better, as you were saying, than the ones that might have been asked before in some regards, in that they're organized differently than the way people have asked questions before, and I think usefully so. That's really the kind of thing I meant by starting the book with an emphasis on how we've really improved the questions we're asking, even if a lot of the answers haven't caught up. In some ways, though, the answers are definitely always getting better. I think we've seen huge strides in recent years, in fact, largely because of computation and building big, complicated models and all the kinds of things we were talking about earlier on. So yeah, I would say the questions are better. I wouldn't say that they're definitive and right, but they'll definitely keep me entertained for the next 30 years or more.

[00:18:10] Speaker B: I mean, one of the things you lay out is our trajectory, "our" as in the human species, from the earliest AI, the GOFAI days of symbolic architectures, on to connectionism and dynamicism. You even mention, in parentheses, basically a Bayesian approach as another major approach. And what you desire to do, and I commend you for this, is to have the best of all of those worlds and essentially unify them, saying we can have a little bit of this and a little bit of that: the right way is to use the right things from these different approaches to build an integrated whole system. Which is what you've tried to do. Well, which is what you've done.

[00:18:57] Speaker A: Yeah, tried to do. I'll give you.

[00:19:02] Speaker B: Which is what you're continuing to do.

[00:19:04] Speaker A: Okay, fair enough.

[00:19:06] Speaker B: One of the things it seems like you do is take a function-based approach to building this thing and constrain it by the biological details. So in that sense it's kind of a top-down approach, right? Instead of, like, the Markram approach of building in all the details, turning it on and seeing what happens, you really approach it from a functional aspect and then build it with all of the biological details as a constraint. Do you think that we have the right functions defined? Even something like working memory, for instance, or attention, and all of those fun words that have some psychological meaning. Maybe they do, maybe they don't. Do we have that cognitive ontology correct?

[00:19:51] Speaker A: No, I mean, there's pretty much no way that we're right at this moment, I think, but we're moving in the right direction. It's better than it used to be. We have a lot of ways of testing the system, and those tests are an attempt to figure out those functions. But really, at least they are operationalizing what we mean by those words. By that I mean we can say something like working memory, and we can have some theoretical idea of what that means. But what we do know is that we've got a bunch of experiments we've done on animals and humans which we call working memory experiments, and we think there's some core to them. If we can build a model that passes those tests, or performs on those tests in the same way as the biological system, then we have a reason to believe that we've got something right about the function. Right. It doesn't mean that we have exactly the right individuation of what the relevant functions are in the brain. Michael Anderson's done some really nice work documenting how complicated and weird this seems to be. Sometimes the part of the brain that's for controlling your fingers also seems relevant for counting. That's not one we might have hypothesized if we hadn't collected a whole bunch of data, tried to operationalize things, and then noticed this weird overlap between the brain areas that light up in these two cases. So yeah, I definitely don't think we're done, but I do think we have enough information that it can constrain our models. Right, exactly the way that you're saying. I guess the slight change I'd make to your characterization of what my lab does as top-down is that it's really bidirectional. We have low-level constraints and high-level constraints: high-level constraints are function, low-level constraints are biological information. And we try to make the two meet. Right.
We've got all kinds of tools and techniques to try to make them meet, and then after making them meet, go and test them in both directions. So are we getting functions in the way that we wanted to? We can test the functions in ways that we didn't take into account when we first built the model. And we do exactly the same thing on the biological side. What kind of spike rates come out of these in this new functional example? And if we collect data from the animal, does it look the same? Right. So, yeah, I see it not as unidirectional, I suppose. And because in all instances we're really looking at constraints, then we also don't have to worry too much about getting the right parcellation of function or the right exact understanding of how neurons work and exactly what currents are doing what and when and where. We don't.

[00:22:14] Speaker B: Right.

[00:22:14] Speaker A: We just instead need to build a model that captures as much data within a single model as we can. Then we have some claim to say this is a pretty unified characterization of psychological and neural function.

[00:22:25] Speaker B: Yeah, I mean, as long as the psychological data, the behavioral data is reproduced at some level, you don't need to worry about what you call it. Of course.

[00:22:34] Speaker A: Right.

[00:22:35] Speaker B: The other side of this coin. So this is the first question.

I could have had a lot more questions for you, but this is Brad Aimone, and he's a previous guest on the show. All right, so here's his question, about 30 seconds. Hey, Chris, this is Brad Aimone. I got a question for you.

[00:22:51] Speaker B: So the neural engineering framework seems quite effective if you have a known function for a brain region that you'd like to translate into a neural algorithm. But I guess the question is, how do you see it when looking at less understood brain regions? Are there any examples of this approach inferring novel functions that may be testable, for brain regions where an explicit formal function is not well understood?

[00:23:19] Speaker A: Right, yeah. Brad is asking specifically about the neural engineering framework, which, just as a bit of context, is essentially a technique for taking a function of some kind and building a spiking neural model to implement that function. We generally like people to specify the function as a dynamical system, meaning that you write down some differential equations, and then off you go. You can build spiking neural network models with different degrees of neural detail and so on. So it's very general. It doesn't tell you how the brain works. It's just a hypothesis about how, for some high-level, low-dimensional dynamics, you might be able to build a spiking neural network that approximates them. So with that in mind, I think Brad's question definitely comes from the way we first presented this work, "we" being myself and Charlie Anderson. We took examples where we could write down the differential equations. If we want to build a memory, we write x-dot equals zero (ẋ = 0), so nothing changes over time, because we're keeping it in memory. Then we can use the methods and off you go. I think that has caused a bit of confusion about the applicability of the methods. People often ask, do you have to have this closed-form description of the function? And the simple answer is no. The neural engineering framework can learn: you can run optimization methods on functions where you just have a bunch of data points, in the same way that you do for deep learning or any other kind of optimization method people use for neural networks. In more recent work, we do that a lot more than we did in the original book. In some ways it's a little less theoretically clean, but the same relationships and techniques all apply, regardless of whether you can write down a closed-form version of your function or not.
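To make the memory example concrete, here is a sketch of how such dynamics are usually mapped onto a recurrent neural population in the NEF. It assumes a first-order low-pass synapse with time constant τ, a modelling detail that is not spelled out in the conversation. Matching the filtered recurrent dynamics to a target system ẋ = Ax + Bu gives the recurrent and input transforms A′ and B′:

```latex
% Target dynamics and assumed first-order synaptic filter
\dot{x} = A x + B u, \qquad h(t) = \tfrac{1}{\tau} e^{-t/\tau}

% Filtering the population's input A' x + B' u through h(t) gives
\tau \dot{x} = -x + A' x + B' u
\;\Longrightarrow\;
\dot{x} = \tfrac{1}{\tau}(A' - I)\,x + \tfrac{1}{\tau} B' u

% Matching terms with the target dynamics
A' = \tau A + I, \qquad B' = \tau B

% Memory: \dot{x} = u, i.e. A = 0 and B = 1
A' = 1, \qquad B' = \tau
\quad\text{so with } u = 0:\ \dot{x} = 0
```

In words: the population feeds its own decoded value back with weight 1, any input is scaled by τ before it is added, and with no input the represented value is held.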
So that being said, I think another reason we've emphasized the ability to specify the function and have that constrain your neural model is that it opens up the possibility of lots of other techniques. With standard neural network learning, people often don't or can't specify the function, and even if I could specify it, I still have to generate a big data set and then do my back-propagation training, which can take a really long time. Whereas in the neural engineering framework we have methods for very quickly finding a solution that will actually replicate the dynamics of the system you're interested in, much faster than trying to train up a system. So I think of the NEF as a way of bringing together a data-driven approach for generating your model and a closed-form, write-down-the-function approach, and you can do both. But because the latter is unique, that's the one we've often talked most about.
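One reason the NEF can find solutions quickly is that, for a fixed population, the output weights ("decoders") can be found with a single regularized least-squares solve rather than iterative gradient training. The sketch below illustrates that idea with plain NumPy rather than Nengo. The rate-based LIF neurons, the parameter ranges, the 0.1 regularization factor and the two target functions are illustrative assumptions, not the settings used in Spaun.

```python
import numpy as np

# Illustrative LIF parameters (assumptions, not Spaun's or Nengo's settings).
TAU_REF, TAU_RC = 0.002, 0.02
rng = np.random.default_rng(0)


def lif_rate(J):
    """Steady-state LIF firing rate for input current J (threshold at J = 1)."""
    rate = np.zeros_like(J)
    above = J > 1
    rate[above] = 1.0 / (TAU_REF + TAU_RC * np.log1p(1.0 / (J[above] - 1.0)))
    return rate


# A random, heterogeneous population representing a scalar x in [-1, 1].
n_neurons = 100
encoders = rng.choice([-1.0, 1.0], size=n_neurons)
intercepts = rng.uniform(-0.95, 0.95, size=n_neurons)
max_rates = rng.uniform(200.0, 400.0, size=n_neurons)

# Gain and bias so each neuron starts firing at its intercept and reaches
# its max rate where encoder * x = 1.
J_max = 1.0 / (1.0 - np.exp((TAU_REF - 1.0 / max_rates) / TAU_RC))
gain = (J_max - 1.0) / (1.0 - intercepts)
bias = 1.0 - gain * intercepts


def activities(xs):
    """Firing rates, shape (len(xs), n_neurons)."""
    return lif_rate(gain * np.outer(xs, encoders) + bias)


# Tuning curves are computed once over the represented range.
xs = np.linspace(-1.0, 1.0, 201)
A = activities(xs)

# Two different functions decoded from the same neurons: f(x) = x and x^2.
targets = np.column_stack([xs, xs**2])

# One L2-regularized least-squares solve; no iterative training.
sigma = 0.1 * A.max()
G = A.T @ A + len(xs) * sigma**2 * np.eye(n_neurons)
decoders = np.linalg.solve(G, A.T @ targets)  # shape (n_neurons, 2)

decoded = A @ decoders
rmse = np.sqrt(np.mean((decoded - targets) ** 2, axis=0))
print(f"RMSE for f(x) = x:   {rmse[0]:.3f}")
print(f"RMSE for f(x) = x^2: {rmse[1]:.3f}")
```

Decoding another function from the same neurons only needs another right-hand-side column in the solve; the tuning curves themselves are not retrained.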

[00:25:54] Speaker B: So do you imagine being able to infer a novel function by approaching it through the NEF? I mean, just through building the various functions in. Part of Spaun is that you don't have to add a whole lot to these parts to implement a new task, for instance, right?

[00:26:14] Speaker A: Right.

[00:26:15] Speaker B: There's like a slow build and you don't have to add that much. But have you had the experience of seeing a novel function arise? I'm rolling my eyes just using arise or emerge or whatever.

Have you seen that happen or thought about the possibility of finding a new function in that manner?

[00:26:33] Speaker A: Yeah. This is another question to which the answer is probably uninterestingly subtle. Every time we implement a function that we've specified with math, it doesn't actually run that function. Right. It's always some other function that approximates what we specified in math. There are uninteresting versions of that, where it's like, yes, I'm out by 0.2 or whatever over the range of interest. There are more interesting versions of that, and the working memory is actually a really nice example. We built a working memory: we wrote down the differential equations, we implemented it in neurons, and we actually got really interesting normalization effects that come from the neurons, not from our methods of replicating the function. Those normalization effects show up in the behavioral data, and our model matches the behavioral data better because we implemented it in neurons. Actually, I think this example is in the book. If we just run the math, which of course we can simulate directly, and then look at how the model performs, it doesn't behave nearly as similarly to humans as when we implement it in neurons using the techniques and then test it with the same data we test humans with. So that's an example where, I don't know if you want to call it a new function, but the way the function was implemented definitely became much more like what actually happens in biology, it seems, given the neural data and the biological data. So does that count as the discovery of a new function? I mean, maybe; it depends. The word function is so generic, right?
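The "uninterestingly subtle" point, that a neural implementation only ever approximates the specified function, can be seen even in the simplest memory. The sketch below is a hypothetical minimal example, not the model from the book: it builds an NEF-style recurrent memory (recurrent weight 1, input scaled by the synaptic time constant) from rate-based LIF neurons rather than spiking ones, loads it to 0.5, lets it hold, and compares it with running the math directly. It makes no attempt to reproduce the normalization effects described above, and all parameters are illustrative assumptions.

```python
import numpy as np

# Illustrative assumptions: rate-based LIF neurons (not spiking), parameter
# ranges, a 0.1 s synaptic time constant and 1 ms Euler steps.
TAU_REF, TAU_RC = 0.002, 0.02
TAU_SYN, DT = 0.1, 0.001
rng = np.random.default_rng(1)


def lif_rate(J):
    """Steady-state LIF firing rate for input current J (threshold at J = 1)."""
    rate = np.zeros_like(J)
    above = J > 1
    rate[above] = 1.0 / (TAU_REF + TAU_RC * np.log1p(1.0 / (J[above] - 1.0)))
    return rate


n_neurons = 200
encoders = rng.choice([-1.0, 1.0], size=n_neurons)
intercepts = rng.uniform(-0.95, 0.95, size=n_neurons)
max_rates = rng.uniform(200.0, 400.0, size=n_neurons)
J_max = 1.0 / (1.0 - np.exp((TAU_REF - 1.0 / max_rates) / TAU_RC))
gain = (J_max - 1.0) / (1.0 - intercepts)
bias = 1.0 - gain * intercepts


def activities(xs):
    """Firing rates, shape (len(xs), n_neurons)."""
    return lif_rate(gain * np.outer(xs, encoders) + bias)


# Decoders for the recurrent connection, which should compute f(x) = x.
xs = np.linspace(-1.0, 1.0, 201)
A = activities(xs)
sigma = 0.05 * A.max()
d = np.linalg.solve(A.T @ A + len(xs) * sigma**2 * np.eye(n_neurons), A.T @ xs)

# Load with u = 1 for 500 steps (0.5 s), then hold with u = 0 for 1 s.
n_load, n_hold = 500, 1000
y = 0.0        # synaptically filtered value driving the neurons
x_ideal = 0.0  # the same dynamics run directly as math: dx/dt = u
for step in range(n_load + n_hold):
    u = 1.0 if step < n_load else 0.0
    x_hat = activities([y])[0] @ d  # decoded recurrent output
    # Low-pass synapse: tau * dy/dt = -y + x_hat + tau * u
    y += DT / TAU_SYN * (-y + x_hat + TAU_SYN * u)
    x_ideal += DT * u

print(f"ideal memory: {x_ideal:.3f}, neural memory: {y:.3f}")
```

Because the decoded recurrent function is only approximately the identity, the neural value typically drifts a little during the hold period while the ideal value stays fixed; how much depends on the random tuning curves, the number of neurons and the regularization.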

[00:28:04] Speaker B: Well, yeah, that's true. Uninterestingly subtle. Is that how you characterize that?

[00:28:10] Speaker A: Well, the first part was supposed to be uninterestingly subtle. This part is maybe a little more interestingly subtle, I think. And then the extreme version of that, in my estimation, is something like GPT-3, and I'll keep coming back to this example, where I've just got gigabytes of language data and I train up a bunch of parameters, and then I can go back, look at the model and say, hey, where's the grammar, where's the syntax, where's the semantics, what is the organization of concepts in the model, and on and on. You can do that kind of thing in the NEF, but we tend to shy away from it because it's just a different methodology in lots of ways. But Spaun has got lots of deep learning in it. I'm super happy to use those techniques; I think they're great. And then it comes down to questions of explainability, or how well you understand something: if you want to go and intervene in the system, how you've built it can matter, and all that kind of stuff. So it's often kind of a spectrum.

[00:29:07] Speaker B: When I ask people... So I mostly have people from the biological sciences on the podcast, but basically anyone I have on the show is at least interested in both sides: AI and what I call neuroscience, which encompasses all the natural sciences having to do with intelligence. I've heard it over and over, this distinction between the goals of AI and the goals of neuroscience. The goal of neuroscience is to understand the brain and minds and natural intelligence, whereas the goal of AI is to build it, and that's more of an engineering problem. But I'd like to know how you see this. You gave the famous Richard Feynman quote earlier, which you also give in the book, and engineering can be used to understand something, which is what I take you to be doing with Spaun. Do you see these as two very separate things, engineering AI versus the goal of neuroscience, and how you should go about doing it? Isn't engineering one method to understand something?

[00:30:09] Speaker A: Yeah, absolutely. I definitely think these are highly connected, and I think there is a lot of recent work in neuroscience which has borne this out. So there are a lot of neuroscientists, especially in the vision sciences, where we have a lot of information and our understanding is getting more and more detailed. And the models we build are thus getting bigger and bigger. A lot of them are using deep learning now. Right. Let me build a deep learning recurrent convolutional neural network and train it up using the same data I train my monkeys with, and then see if the tuning curves are similar. And if I add constraints into my model which are ones I observe in neocortex, does it do better on certain kinds of problems? And you can find cases where it does. Our best models of the visual system are now deep-learned neural network models. So I think that blurring, if you want to call it that, between techniques in neuroscience and techniques in AI is going to continue 100%. And it's definitely happening in our hands. We've recently come up with some networks which have gone in the other direction, where we were looking at how brains represent time and came up with a new understanding of that which matched really nicely onto the time cells recorded from rodent hippocampus.

[00:31:15] Speaker B: These are the LMUs, right?

[00:31:17] Speaker A: Exactly. Yeah. The Legendre memory unit.
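The Legendre memory unit is only named here, so the following is a minimal, non-spiking sketch of the linear memory at its core, written from the equations as we understand them from the published LMU formulation (Voelker, Kajić and Eliasmith, NeurIPS 2019): theta * dm/dt = A m + B u, with a_ij = (2i+1) * (-1 if i < j else (-1)^(i-j+1)) and b_i = (2i+1)(-1)^i, and the input from theta' seconds ago read out through shifted Legendre polynomials evaluated at theta'/theta. The memory order d = 6, window theta = 0.5 s, forward-Euler integration, and 0.5 Hz test signal are arbitrary illustrative choices; this is not the lab's or ABR's code, and the nonlinear recurrent layer of the full LMU is left out.

```python
import numpy as np

def lmu_memory_matrices(d, theta):
    """(A, B) of the LMU linear memory, already divided by theta: dm/dt = A m + B u."""
    A = np.zeros((d, d))
    B = np.zeros(d)
    for i in range(d):
        B[i] = (2 * i + 1) * (-1) ** i
        for j in range(d):
            sign = -1 if i < j else (-1) ** (i - j + 1)
            A[i, j] = (2 * i + 1) * sign
    return A / theta, B / theta

def delay_decoder(d, r):
    """Shifted Legendre values P_i(2r - 1), i = 0..d-1, via Bonnet's recurrence.

    r = theta_prime / theta selects how far back in the window to read."""
    x = 2.0 * r - 1.0
    p = np.zeros(d)
    p[0] = 1.0
    if d > 1:
        p[1] = x
    for n in range(1, d - 1):
        p[n + 1] = ((2 * n + 1) * x * p[n] - n * p[n - 1]) / (n + 1)
    return p

d, theta, dt = 6, 0.5, 1e-4              # illustrative choices, not values from the episode
A, B = lmu_memory_matrices(d, theta)
decoder = delay_decoder(d, 1.0)          # read out the input from a full window ago

t = np.arange(0.0, 4.0, dt)
u = np.sin(2 * np.pi * 0.5 * t)          # hypothetical 0.5 Hz test input
m = np.zeros(d)
y = np.empty_like(t)
for k, u_k in enumerate(u):
    m = m + dt * (A @ m + B * u_k)       # forward-Euler step of the memory ODE
    y[k] = decoder @ m

settled = t >= 2.0                       # ignore the start-up transient
target = np.sin(2 * np.pi * 0.5 * (t - theta))
max_err = np.max(np.abs(y[settled] - target[settled]))
print(f"max |decoded - u(t - theta)| after settling: {max_err:.4f}")
```

The point of the design is that a handful of state variables (here six) hold a compressed record of a whole sliding window of input, and any delay inside that window can be read out linearly by changing r.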

[00:31:19] Speaker B: Cool. It's cool. That's really neat stuff. Yeah.

[00:31:22] Speaker A: And then we took it over to the AI side and we're like, look, it beats all of your time series techniques. That seems interesting, right? I mean, convolutional neural networks are the same thing. They beat all of the machine vision methods that existed when they came out. And they're based exactly on looking at how the brain has organized the visual cortex. So, yeah, this kind of swapping back and forth, I think, is going to continue; if anything, it's going to happen more. And as for the answer that they just have different goals: I mean, goals are things that are had by people. Right. And so different people in those different disciplines tend to have goals of the kind that you specified. But that doesn't mean the methods aren't perfectly useful in either domain, and it doesn't mean you can't use deep networks to understand the brain, and it doesn't mean you can't use brain understanding to do better in neural networks research as well.

[00:32:12] Speaker B: If I had to hazard a guess, and I will, my guess is that people interested in how natural intelligence works, in understanding natural intelligence, are much more prone to accepting inspiration and ideas from the AI world than vice versa, than AI is interested in how the brain might perform some similar operations. Do you agree with that? And if so, why are the AI folks less interested in brains than brain folks are interested in AI, let's say?

[00:32:48] Speaker A: Yeah, it's an interesting question. Honestly, I don't really hold that view. So I can think of examples of people in AI who absolutely think neuroscience is a waste of time. Michael I. Jordan is a perfect example. I had a long conversation with him about this. But there are people of equal or greater stature who think that neuroscience is fantastic and really like it, you know, so Yoshua Bengio cares a lot about human psychological development. And Geoff Hinton has always connected his work to neuroscience. He hasn't put a lot of weight on it. He doesn't necessarily think it's a critical hypothesis. But he definitely goes out of his way to say these are reasonable things and this might be a good interpretation of what's going on in the brain.

[00:33:29] Speaker B: But that's different than taking inspiration from actual brain mechanisms to really constrain your model. You can slap the biological plausibility label on anything, really. I mean, some do that with more good faith than others. Right. And I'm not calling out the big AI names here.

[00:33:50] Speaker A: Yeah, well, yeah. So convolutional neural networks, again, are one of my favorites. Yann LeCun, of course, did take inspiration from biology directly, I think. Yeah. So then there's the second part of my answer to your question, where I was about to agree with you, which is that if you talk to people in AI, they don't spend a lot of time reading a ton of neuroscience and trying to extract every principle from neuroscience and put it in their model. That's more of a theoretical neuroscience kind of thing to do. And I think the reason there is that function will trump biological plausibility. What I care about most is whether I can get it to work; that's going to be more important to me. And by work, I mean it has to do better than what the other guy did recently on whatever the benchmark is I care about.

And adding constraints is going to make that harder, not easier, typically. So it's not that common that I see a constraint, or an inspiration if you want to call it that, from neuroscience that makes my model better when I include it. But when it does happen, it can happen in spades. So it's really a question of being able to distill the relevant things you learn from neuroscience into something that is functionally useful. And that's the hard part.

[00:34:56] Speaker B: It goes back to goals again, though, I guess, because the big goal in AI is AGI, whatever general means in AGI. Right. But what you're saying, at least in the part where you agree with me, is that an AI researcher just wants to get the damn thing to work, and it doesn't really matter how specialized it is or how it actually works. So there's really little reason to take inspiration from the only organ we know of that has this general capability. It would slow you down, essentially, to build the whole thing, something so bulky that it does lots of other things besides the one function you want it to do.

[00:35:38] Speaker A: Yeah.

[00:35:38] Speaker B: Does that track with you?

[00:35:40] Speaker A: Well, so I wouldn't say there's little reason to do it. I would say that it's really hard to pick the right inspiration. Right. So I think at the end of your comment there, you were hitting the nail on the head: it's hard to know which of the constraints you could take from biology are the ones that matter for function. Right. And that's really what they care about. And there definitely are some, and sometimes they have been discovered. But there are a lot of other constraints which don't seem that important for function. We don't care about things dealing with blood vessels or ion channels, and biology cares a lot about those things. And so distinguishing the constraints is really the challenge. Right. And since it's so hard to do, you're not going to spend your time on it; if you take them all, you know, 1% or less are going to be the ones that were worth your time. And you've just got other things to do with your time.

[00:36:25] Speaker B: Spikes are not worth anyone's time, are they?

That's what we call a segue. So I want to talk about Applied Brain Research and neuromorphics here before we get into Spaun. You helped start Applied Brain Research, but I don't actually know what your role is with the company now. So this is a company offshoot from research done in your lab, is that right?

[00:36:50] Speaker A: Yep. Yeah, I'm one of the founders.

[00:36:52] Speaker B: So are you a scientific advisor now?

[00:36:54] Speaker A: I work sort of more closely than that. I'm technically the co-CEO of the company.

[00:37:00] Speaker B: Okay. Because things are still spinning out of your lab into the company as well, right?

[00:37:04] Speaker A: Yeah, that's right. Yeah.

[00:37:05] Speaker B: So you guys develop... well, lots of things. I'll tell you what, this is a good time to pause and play you question number two. This is from Steve Potter, who was the first person on the podcast.

[00:37:19] Speaker A: Cool.

Steve Potter (recorded question): Hello, Chris, this is Steve Potter from Georgia Tech. I'm a huge fan of your approach to modeling the brain. I think it's the right way to go. My question is: how soon do you think models like yours will be implemented in neuromorphic spiking hardware to solve actual problems for the average person? For example, I've watched fuzzy logic fade into the background in camera autofocusing systems. I've seen backprop nets fade into the background of postal zip code recognition. And recently deep learning models have faded into the background in many ways as big companies use them for complex text and image understanding. Neuromorphic spiking hardware has been around since the 90s, yet we still can't find it in any consumer devices. So I'm wondering when you think that will happen.

[00:38:09] Speaker B: I bet you have an answer to this.

[00:38:12] Speaker A: I don't know how precise my when answer will be. Yeah, I will comment that just because we had stuff in the 90s doesn't mean the time is up.

Large scale deep neural networks have been around a lot longer than that. Right. So yeah. So. But it is an excellent question. It's one that's hard to answer from the company perspective. Of course we would love it to be sooner rather than later. I think what we've learned on the company side is that launching new hardware actually commercially is definitely a huge challenge. Even large companies like IBM and Intel who have developed neuromorphic chips have not released them commercially, which is unfortunate and challenging. But I still think that the fundamental advantages of event based computing and spike based computing and neuromorphics will be realized and probably in the next couple of years. And then the question of how long that will take to get into some product. I mean, hopefully it's sooner rather than later from the perspective of all these companies that are doing this kind of thing.

But I would be surprised if in the next five years there isn't some application area which is dominated by a neuromorphic approach.

[00:39:17] Speaker B: My understanding was that you guys are building neuromorphic chips and if that's right, I'm wondering if that is the dominant thing that the company is doing.

[00:39:26] Speaker A: So we are not building neuromorphic chips. Right. We are working with companies that build neuromorphic chips. So there are companies like GrAI Matter Labs, and there's a company that's going to start up based on the SpiNNaker 2 project, and Intel. You know, we built a bunch of software and demos and everything for the Intel chip. So yeah, it's something that we have done, but we're not doing our own hardware. And we are looking at finding financing now, but we're basically profitable and, you know, going on our merry way and perfectly content to keep doing what we're doing.

[00:39:54] Speaker B: I mean, one of the reasons why spiking is attractive is simply power consumption: it's a lot lower power. And there's the classic notion that you could run our brain on about 20 watts, about as much as it takes to run a low-powered light bulb. And yet the Googles and deep learning companies of the world are burning up our planet, consuming so much energy. And so implementing neuromorphic chips would reduce that energy by orders and orders of magnitude.

[00:40:23] Speaker A: Yeah.

[00:40:24] Speaker B: Is spiking itself... Because I know you don't have to run on spiking. Is the advantage simply power consumption, or are there computational advantages that we should really take to heart with spiking as well?

[00:40:38] Speaker A: I think the short answer is, unfortunately, the unglamorous one, which is that spiking is all about energy reduction. But it's also not unglamorous. It is absolutely an overriding reason why a brain survives or not: whether it can get enough energy to keep it going. It's also critical for environmental reasons, like you said. And if you could take your cell phone and put your laptop in it, and it would run for years because you're using different kinds of hardware, you would care a lot.

It sort of sounds unglamorous to say, yeah, it's just about power, but you.

[00:41:12] Speaker B: Got to get excited about power some way.

[00:41:13] Speaker A: Everything is about power.

[00:41:15] Speaker B: That's like saying all of our beautiful cognitive functions are built on this surviving meat machine. And so you have to get excited about the survival aspect but that's not what's exciting to us. We want to talk about all of the higher cognitive functions, right?

[00:41:31] Speaker A: Yeah, you need both. Right. So we've actually done calculations: if we take Spaun and run it on GPUs now, we know how many watts they take, and Spaun's really small. So we can just multiply the number of watts Spaun takes to get us to the size of a human brain. And if we do that, it's over a gigawatt. Right. So if we had, using current techniques and hardware, something which was the size of the brain, even if we figured out all the algorithmic challenges, which of course is a big hope, the amount of power we'd be using is like three power plants just for one model. Right. It's ridiculous. So it really is an absolutely critical constraint. And it's a big reason that we care a lot about low power in the company, I should say. The company is actually using a lot of low-power techniques on commodity hardware. We have realized that there's this challenge in getting commercially available neuromorphic chips out there, which are fundamentally spiking. But because we're algorithm-focused, we can adapt those algorithms to current digital hardware and still set world records in the amount of power we're using to get a certain amount of accuracy, or whatever the metric is you want.
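The per-GPU figures are not given in the conversation, so the sketch below only redoes the scaling arithmetic under stated assumptions: power scales linearly with neuron count (the "just multiply" described above), a human brain has roughly 86 billion neurons (a commonly cited estimate, not a number from the episode), and Spaun 2.0 has the 6.6 million neurons quoted later in the episode. It works backwards from "over a gigawatt" to the Spaun power draw that claim implies, and compares a gigawatt with the ~20 W brain figure mentioned earlier. It is not the lab's actual calculation, which may have scaled by synapse count or accounted for simulation speed.

```python
SPAUN_NEURONS = 6.6e6   # Spaun 2.0 size quoted in this episode
BRAIN_NEURONS = 86e9    # assumption: commonly cited estimate for the human brain
BRAIN_WATTS = 20.0      # the ~20 W brain figure mentioned earlier in the episode
GIGAWATT = 1e9

# How many Spaun-sized models it takes to reach brain size (about 13,000).
scale = BRAIN_NEURONS / SPAUN_NEURONS

def brain_scale_watts(spaun_watts: float) -> float:
    """Linearly extrapolate a Spaun power draw to human-brain size (an assumption)."""
    return spaun_watts * scale

# Working backwards: the Spaun draw at which the extrapolation reaches 1 GW.
spaun_watts_for_1gw = GIGAWATT / scale

print(f"neuron scale factor: {scale:,.0f}x")
print(f"Spaun draw implied by 1 GW: {spaun_watts_for_1gw / 1e3:.1f} kW")
print(f"1 GW vs. a 20 W brain: {GIGAWATT / BRAIN_WATTS:,.0f}x")
```

Whatever the exact inputs, the gap between a gigawatt-scale simulation and a 20 W brain is many orders of magnitude, which is the constraint being stressed here.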

So even though the commercial future might be less certain on the hardware side, I think on the algorithmic side you will probably see "neuromorphic" techniques (in quotes, I guess, because at this point they're purely algorithmic) make the next generation of AI a lot more power efficient.

[00:42:57] Speaker B: Is this your first foray into being a company man?

[00:43:02] Speaker A: It is, yep.

[00:43:04] Speaker B: That must be enlightening. And we could probably talk for hours just about that. I had Dileep George on the show, who founded Vicarious, and they build robot arms.

Well, I'm not going to say that like you guys do because they're very different.

And we'll get into the embodied Spaun in just a moment. But he said in the past year or so he spent 80% of his time doing sales and product stuff. And I guess, like survival, like power consumption, you have to be excited about it. He's still excited about it, because to him it's just another thing to optimize.

But part of his point was that in the company, it's not just that you make these beautiful research products and then move them into the company. You have customers, and you have to actually listen to what the customers need, specification-wise and all that. Again, power efficiency must be the number one thing that you guys are hearing from customers.

[00:44:00] Speaker A: Actually, it's not the number... Well, yeah, it's part of the number one thing. People want to be very careful about sacrificing accuracy for power. Right. You don't want to be dropping your power and then have the model not perform that well. That's just kind of unacceptable. So you're always trying to optimize both of those things at the same time. I mean, I'm lucky insofar as being co-CEO means that there's another co-CEO who's on the business, marketing, and sales side. And so I definitely don't have that kind of challenge. I am definitely more focused on building: helping them design the products, figuring out what the next steps are, using the technologies that come from the lab, integrating them, and all that kind of stuff.

[00:44:43] Speaker B: So you're what, 90% lab? 10%. I mean, I know that they're so overlapping. 80, 20. 80, 20. Okay. The Pareto principle. You want to talk Spaun?

[00:44:52] Speaker A: Sure thing.

[00:44:53] Speaker B: This is the main thing that you probably talk about. It's in all your presentations. These beautiful animations, by the way. They're really fun.

So Spaun is the unified network of what's called a semantic pointer architecture that we'll get into in a minute. But eight years ago, 2012ish, when you published a paper in Science and then published a book on it, you had two and a half million neurons. Now it's at 6.6 million neurons with, like you said earlier, 20 billion what we'll call synapses, connections. And whereas before it did eight tasks, a measly eight tasks, now it does 12 tasks.

And I know that part of what you're doing and you have these great demo videos, you're adding an adaptation.

So we talked about how the different functions map onto different brain areas. And I'm not sure if you include a cerebellum yet in the animations, but maybe that's in the near future with this adaptation that you're adding in. And one of the other latest things it can do is instruction following. Maybe you can talk about these things more as we go along. But now this is Spaun 2.0, I suppose. Is that right?

[00:46:07] Speaker A: Yeah, that's what we call it.

[00:46:10] Speaker B: Was there a 1.2 or was it just.

[00:46:12] Speaker A: No. Okay, 1 and 2.

[00:46:13] Speaker B: I like that. Big steps.

[00:46:14] Speaker A: Yeah.

[00:46:15] Speaker B: Is the simple way to say it that the goal is to build a brain? I mean, just like the title of the book.

[00:46:19] Speaker A: Yeah, absolutely.

[00:46:20] Speaker B: How has that goal.

[00:46:21] Speaker A: It's my shortest answer.

[00:46:22] Speaker B: Yeah, I love that answer. Has that goal changed at all? Because, I mean, of course there are a billion sub goals with that overarching larger goal.

[00:46:30] Speaker A: Yeah, it really hasn't changed over time. Yeah, I mean, yeah, we want to build a brain. And the way we built the first one... Let me specify how it hasn't changed. So the way we built the first one is that we essentially had several students working on different projects, focused on functions of different brain parts, and then took all of those and integrated them together in one big model. And after doing that, of course, I have more students and they have different projects. And when you write a PhD thesis, it has to be your own work. And so to some extent you want to have the disintegrated model: you want people working on the different component parts before you put it all together.

And so, yeah, that happened. And then basically all the parts that were in Spaun were improved, and new ones were added and extended and so on. And then it was reintegrated, as it were, a second time. Right. Which is Spaun 2.0. And now we're back more in the phase of improving our understanding of all those parts as well as adding additional components. And that is really driven by the students. Right. The things they care about are the things that tend to get time spent on them. And if it overlaps with something that's in Spaun, it will result in an improvement. And if it's something that's not in Spaun, it will result in a new feature or functionality or task, if you will.

[00:47:41] Speaker B: I have 100 questions just about the original development of the thing. I mean, so someone was working on a robot arm, and someone was working on an eye, a fixated eye, and someone was working on working memory. And then you brought these together, sort of.

[00:47:56] Speaker A: Yeah. I mean, really, someone was working on the motor system. So then a robot arm, like, it's not supposed to be a robot arm, it's supposed to be a biological arm, but whatever. Very similar principles. So somebody was working on motor control, Somebody else was working on vision and visual classification.

That's why it uses deep learning methods: that seems like one of the better ways to generate those sorts of models, as lots of people have now come to do. And somebody else was working on working memory, so trying to just build a model which replicates a lot of the different kinds of working memory experiments that you see both in humans and other animals.

And, yeah, off you go. And, you know, critically, a postdoc of mine, Terry Stewart, was very focused and remains focused on the basal ganglia as something which coordinates behavior across all of these different cortical areas. And of course, that's one of the really key pieces to being able to do the integration and build a model like Spaun. So bringing all of that together is what resulted in Spaun 1.

[00:48:51] Speaker B: That control system... do I have this right? That was at the heart of the General Problem Solver back in the early AI days. Is that right? Like with Newell?

[00:49:02] Speaker A: I don't think so.

[00:49:03] Speaker B: I thought they had a central control, like a production system.

[00:49:08] Speaker A: Yeah, so you can. Yeah, so they would never have called it a basal ganglia. They did have a production system, and ACT-R also has a production system, and Soar is a production system.

But the question is sort of how do you do the rule matching that they do in production systems? I don't even think of it as rule matching, to be honest. But yeah, if you have a controller, I guess then you could think of that as similar to what the basal ganglia is doing in Spaun.
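To make the contrast with symbolic rule matching concrete, here is a minimal non-neural sketch, assuming a common SPA-style framing: each possible action has a utility equal to the dot product between the current cortical state and that action's condition vector, and the highest-utility action wins. The vocabulary, rule names, and dimensionality are hypothetical, and `np.argmax` merely stands in for the basal ganglia's competitive selection; Spaun's actual basal ganglia and thalamus are spiking circuits, not this function.

```python
import numpy as np

rng = np.random.default_rng(0)
D = 256  # pointer dimensionality (illustrative)

def unit(v):
    return v / np.linalg.norm(v)

# Hypothetical vocabulary: random high-dimensional unit vectors are nearly orthogonal.
vocab = {name: unit(rng.standard_normal(D))
         for name in ["SEE_DIGIT", "HEAR_QUESTION", "IDLE"]}

# Hypothetical rules: (condition pointer, action label), loosely like IF-THEN productions.
rules = [
    ("SEE_DIGIT", "store_in_working_memory"),
    ("HEAR_QUESTION", "retrieve_answer"),
    ("IDLE", "do_nothing"),
]

def select_action(state, rules, vocab):
    """Score every rule at once by dot-product similarity and pick the winner.

    There is no exact symbol matching, only graded utilities; the argmax plays
    the role of the winner-take-all competition between actions."""
    utilities = np.array([state @ vocab[cond] for cond, _ in rules])
    return rules[int(np.argmax(utilities))][1], utilities

# A noisy cortical state that mostly resembles SEE_DIGIT.
state = unit(vocab["SEE_DIGIT"] + 0.2 * unit(rng.standard_normal(D)))
action, utilities = select_action(state, rules, vocab)
print(action, np.round(utilities, 2))
```

Because utilities are graded, a state that only partly matches a condition still produces a sensible choice, which is one reason this reads less like rule matching than a classic production system does.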

[00:49:33] Speaker B: Okay, so at the heart of Spaun, the unified network, is the SPA, the semantic pointer architecture. First of all, I'm just curious, was this the heart of all of the individual components before they began to be integrated? Has that always been there at the beginning, or is this something that you had to build in when you tried to unify everything?

[00:49:55] Speaker A: Yeah, it was more of the latter. So we had lots of work going on in the lab, and I had done lots of previous models of different component parts. It might not be too surprising to hear that I did systems design engineering, which is a very high-level, integrative kind of engineering. And then I did philosophy, which is even more high-level and theoretical. And I got to wondering, how can we bring all of these low-level bits and pieces together? There obviously seems to be a lot of coherence, because we're using the same mathematical techniques to build all these models and so on. And so that's really what gave inspiration for trying to come up with a method of unifying all the different things that we had done, all the way from motor control and vision to cognitive control, intelligence, language processing, all these sorts of things. And it was the attempt to tell a coherent story that resulted in the SPA.

[00:50:43] Speaker B: Okay, so what is the semantic pointer hypothesis, in a nutshell?

[00:50:47] Speaker A: Right. So that's a good question. I guess I should maybe say that the semantic pointer architecture itself is an architecture insofar as it's a set of component parts and some methods for integrating new parts. And one of the things that's important to those methods is semantic pointers. This is essentially a form of communication. And so the semantic pointer hypothesis basically says that high-level functions in a biological system, so cognitive functions, are made possible by processing semantic pointers. So they're basically a kind of representation. In fact, they're specifically neural representations that carry some semantic content, and they can be put together into representational structures, the structure being really important for capturing cognitive phenomena. And they're basically what underlies complex cognition. So that's the hypothesis itself: semantic pointers are compressed representations that are used to build structured representations for complex cognition. And then a lot of the rest of the book can be seen as filling in the details. What do you mean by structured representations? How do you construct those, why are they neural representations, what role do they play, how can you manipulate them, and how do they get generated by a vision system or a motor control system?

[00:52:05] Speaker B: I mean, one way that I just kind of noted to myself to view the brain, as you have it with a semantic pointer architecture, is like it's a big autoencoder, right? Where you go from, and you lay it all out really nicely in the book, super high-dimensional, let's say, visual input. And then you have these semantic pointers that compress that data, compress that information as they represent various parts of it, and transform those representations into these much lower-dimensional, more abstract, you could say compressed, entities. But then when you need to move your arm, for instance, you have to do what you call dereferencing. That's a very engineering, computer science term, I imagine. Damn it.

[00:52:56] Speaker A: Yeah.

[00:52:57] Speaker B: So you have to dereference, in other words, go from this really low-dimensional, highly abstract, compressed form back into the high-dimensional space to enact some act. And it's not just perceptual-motor. I mean, these things happen in various cognitive processes as well. But in that sense it squashes the function and then reproduces it, kind of like an autoencoder: it comes back to a larger, high-dimensional space. Maybe that's the only way it's like an autoencoder.

[00:53:26] Speaker A: Yeah. So definitely the high, low, high thing is like an autoencoder. I think the difference is that the auto part isn't working out, because you're not really reproducing the input. Instead you're mapping it to some other space. Like, the motor space is just not the same as the visual space, but that's right. Yeah. I think it is important to have those concepts of going from higher dimensions to lower dimensions, because manipulating things in the low-dimensional spaces can be much more efficient than trying to manipulate stuff in the high-dimensional space. And then, as you were saying, you dereference back out, potentially to another high-dimensional space. Right. Which is what you might need for control.
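The high-low-high idea can be sketched with plain linear algebra, under assumptions that are ours rather than Spaun's: synthetic data in which a few hypothetical latent causes drive both a 200-D "visual" input and a 10-D "motor" command, PCA standing in for a learned visual hierarchy, and a least-squares map standing in for motor dereferencing. The input is compressed to a 3-D pointer and then mapped into a different high-dimensional space instead of being reconstructed, which is the difference from an autoencoder drawn above.

```python
import numpy as np

rng = np.random.default_rng(1)
n, visual_dim, pointer_dim, motor_dim = 500, 200, 3, 10   # illustrative sizes

# Hypothetical data: a few latent causes drive both what is seen and what the arm
# should do, through different linear mappings.
latent = rng.standard_normal((n, pointer_dim))
latent -= latent.mean(axis=0)
visual = latent @ rng.standard_normal((pointer_dim, visual_dim))
visual += 0.01 * rng.standard_normal((n, visual_dim))
motor = latent @ rng.standard_normal((pointer_dim, motor_dim))

# High -> low: compress visual input into a short "pointer" with PCA (via SVD).
visual_mean = visual.mean(axis=0)
_, _, vt = np.linalg.svd(visual - visual_mean, full_matrices=False)
compress = vt[:pointer_dim].T                  # visual_dim x pointer_dim
pointers = (visual - visual_mean) @ compress   # n x pointer_dim

# Low -> high, but into a different space: least-squares map from pointers to
# motor commands, rather than back to the visual input.
to_motor, *_ = np.linalg.lstsq(pointers, motor, rcond=None)
motor_hat = pointers @ to_motor
rel_err = np.linalg.norm(motor_hat - motor) / np.linalg.norm(motor)
print(f"{visual_dim}-D input -> {pointer_dim}-D pointer -> {motor_dim}-D motor, "
      f"relative error {rel_err:.4f}")
```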

[00:53:59] Speaker B: In the book you really detail and you know, obviously we're not going to get into all the details on how representations occur, you know, and how they're transformed. One of the key features of a semantic pointer and the semantic pointer architecture is that they are composable. Right. So you can combine the semantic pointers through mathematical operations and compose higher order functions. Maybe you could elaborate on that.

[00:54:25] Speaker A: Sure, yeah. So I think the key part here is that this is mostly focused on representations. The representations are the things that you're constructing with your composition. And then we have processes for performing the compositions, unperforming the compositions, so doing things like dereferencing and so on, as well as manipulating those representations. So I think it's always helpful to distinguish between the representations and the processes acting on them, basically. And it's a little bit more difficult to say that the processes are composable. They're more like... I'm not sure what the right word would be, but they're organizable. You can put them in different orders, so you can apply operations in different orders and things like that in order to get different effects. But yeah, that's right. So for the cognitive semantic pointers, we identify some specific mathematical operators in the book. We've actually come up with other operators since then that have some nice properties and so on. And definitely in the book I focus on cognition, and that's why I talk a lot about circular convolution. This idea is basically borrowed from Tony Plate, who came up with circular convolution as a good way to generate structured vector spaces. So yeah, we've run with that, implemented it in neurons, extended it in various ways and so on. And it ends up being a really powerful way of constructing things that look like sentences or lists or trees, all these kinds of standard computer science data structures that seem really useful for getting our computers to do things and seem to map onto notions like grammar and so on in cognition. And now we can represent those in spiking neurons and manipulate them with the basal ganglia type of controller and all that kind of thing.
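Circular convolution binding, in the spirit of Plate's holographic reduced representations, can be sketched in a few lines. This is a plain NumPy illustration, not the neural implementation used in Spaun or Nengo: binding is computed through the FFT, unbinding uses Plate's involution as an approximate inverse, and a clean-up step picks the closest known vector. The role and filler names and the 512-dimensional size are hypothetical choices.

```python
import numpy as np

rng = np.random.default_rng(2)
D = 512  # pointer dimensionality (illustrative)

def make_pointer(d=D):
    """Random vector with i.i.d. N(0, 1/d) elements, as in Plate's HRRs."""
    return rng.standard_normal(d) / np.sqrt(d)

def bind(a, b):
    """Circular convolution of a and b, computed via the FFT."""
    return np.fft.irfft(np.fft.rfft(a) * np.fft.rfft(b), n=len(a))

def approx_inverse(a):
    """Plate's involution a~[i] = a[-i mod d], used as an approximate inverse."""
    return np.concatenate(([a[0]], a[:0:-1]))

def cleanup(v, vocab):
    """Name of the vocabulary item with the largest dot product with v."""
    return max(vocab, key=lambda name: float(v @ vocab[name]))

# Hypothetical vocabulary of fillers and roles.
fillers = {name: make_pointer() for name in ["DOG", "CHASE", "BOY"]}
roles = {name: make_pointer() for name in ["AGENT", "VERB", "PATIENT"]}

# "Dog chases boy" as one D-dimensional vector: a sum of role-filler bindings.
sentence = (bind(roles["AGENT"], fillers["DOG"])
            + bind(roles["VERB"], fillers["CHASE"])
            + bind(roles["PATIENT"], fillers["BOY"]))

# Query "who is the patient?": unbind with the role's approximate inverse, then clean up.
noisy_answer = bind(sentence, approx_inverse(roles["PATIENT"]))
print(cleanup(noisy_answer, fillers))
```

Because the whole structure is squeezed into a vector of the same size as its parts, the retrieved filler is only approximate; the clean-up step is what turns it back into a usable symbol-like pointer.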

[00:56:05] Speaker B: You think of representations as being in a high dimensional vector space, as vectors in a vector space. That's sort of how you define representation, right? Which is a connectionist way, sort of.

[00:56:18] Speaker A: I'm not sure exactly how subtle you want me to be, but.

[00:56:20] Speaker B: Oh man, we like subtle. We can go down the rabbit hole a little bit on the show without dragging it out too much. But I like how none of your answers are yes or no. You had that one yes answer.

[00:56:30] Speaker A: I had one.

[00:56:31] Speaker B: Yeah, that was good.

[00:56:31] Speaker A: Are we still trying to build a brain that's more than most?

[00:56:33] Speaker B: Yeah, so, yeah.

[00:56:36] Speaker A: So I guess the subtle answer is that I typically distinguish between a neural-level representation and a state-level representation.

And that's why I wasn't sure if I should say yes, please.

[00:56:45] Speaker B: Yeah.

[00:56:47] Speaker A: The vector space you were talking about is the state-level representation, not necessarily what's going on in neurons. And that's right, those tend to be low-dimensional. Neural spaces tend to be very high-dimensional, much higher even than whatever state space you're representing and working in. And this relates back to the earlier questions about starting with functions and relating them to spiking neurons. We can also start with vector spaces which are like abstract, standard vector spaces, a Hilbert space or something. And then we can think about how to map that into a different vector space, which is a bunch of neurons that are spiking and can have whatever weird kinds of properties. But you can do a lot of thinking in the original vector space, the state space as I like to call it: specify hypotheses there, think about what the right processes are to manipulate these representations, think about transformations there, think about linearity and nonlinearity happening in that space.

And then, conveniently, the NEF gives us methods to map all of that into spiking neurons, into this, in some regards much more complicated, space of neural activity.
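The state-space versus neuron-space distinction can be illustrated with a small sketch of the NEF's encode/decode idea, under simplifying assumptions: rectified-linear rate neurons instead of spiking leaky integrate-and-fire neurons, random encoders, gains, and biases, and ridge-regularized least-squares decoders. The hypothesis lives in a 1-D state space, while 200 neurons carry it in a much higher-dimensional activity space, and different decoders read out either the state itself or a nonlinear function of it. This is a sketch, not Nengo code.

```python
import numpy as np

rng = np.random.default_rng(3)
n_neurons = 200

# Encoding (hypothetical random parameters): each neuron gets a preferred direction
# (+1 or -1 for a 1-D state), a gain, and a bias current.
encoders = rng.choice([-1.0, 1.0], size=(n_neurons, 1))
gains = rng.uniform(0.5, 2.0, size=n_neurons)
biases = rng.uniform(-1.0, 1.0, size=n_neurons)

def rates(x):
    """Rectified-linear firing rates for states x (shape: samples x 1)."""
    return np.maximum(0.0, gains * (x @ encoders.T) + biases)

def solve_decoders(x, target, reg=0.01):
    """Ridge-regularized least-squares decoders from neural activity to target(x)."""
    A = rates(x)
    sigma = reg * A.max()
    gram = A.T @ A + len(x) * sigma**2 * np.eye(A.shape[1])
    return np.linalg.solve(gram, A.T @ target)

# Hypotheses are stated in the 1-D state space; the neurons form a 200-D space.
x = np.linspace(-1.0, 1.0, 201).reshape(-1, 1)
identity_decoders = solve_decoders(x, x)       # represent x itself
square_decoders = solve_decoders(x, x**2)      # compute a nonlinear transform, x**2

activity = rates(x)                            # 201 samples x 200 neurons
rmse_x = np.sqrt(np.mean((activity @ identity_decoders - x) ** 2))
rmse_sq = np.sqrt(np.mean((activity @ square_decoders - x**2) ** 2))
print(f"RMSE decoding x: {rmse_x:.4f}, decoding x**2: {rmse_sq:.4f}")
```

The same neural activity supports both readouts; only the decoders change, which is why one can reason about transformations in the state space and leave the mapping into neurons to the framework.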

[00:57:53] Speaker B: Okay, so we're going to pause here then because, like I said, I had Randy O'Reilly on the show and we talked about his cognitive architecture, Leabra, and I told him that you were going to be coming on the show. And so he...

I'm going to play you a three-and-a-half-minute clip here, with a question at the end, to give context to what he said. And then we'll have one more question from him after, and then we can use those as jumping-off points to talk about whatever we need to.

[00:58:20] Speaker A: Sounds good.

[00:58:23] Speaker B: And I wanted you to kind of compare Leabra and Spaun.

[00:58:26] Speaker C: Oh yeah, for sure. A lot of the. There's a lot of commonality and therefore, you know, a lot of opportunity to draw kind of key contrasts. And you know, Spaun, I think, you know, does have that advantage of being something where you have a lot of control over what the system does. And a lot of what they've done with the architecture is, is kind of configure, really building on work that Tony Plate did in the 90s, these holographic reduced representations to solve the binding problem, to do kind of, you know, fairly complex elaborate forms of cognitive information processing. And so I think they're able to get a lot of functionality out of the system because essentially they have a lot of control over what's happening. Whereas in Libra we're more at the mercy of like, what can we train the system to do and what kind of dynamics are going to emerge as we try to train these systems. And so we have a lot less control. And so I think we can't. You know, they have like models of doing like Raven's Progressive matrices and lots of complicated kinds of tasks. On the other hand, those models also provide then kind of perhaps less insight into how the brain really does it because it's kind of reflecting more of an information processing kind of prior assumption about how it could work. And one question I would ask about these models is how much have you learned in making that model relative to what you kind of came in with it? Like how much did the model teach you in making the model? Because I feel like once you have a framework where you can basically get the model to do what you want it to do, that's nice, but it also means that the model has less opportunity to teach you something that you didn't otherwise kind of know about the.

[01:00:20] Speaker B: The brain itself, or about the functioning of the brain?

[01:00:21] Speaker C: About the brain, about how that cognition actually might work in the brain. You know, I don't feel like the model of Raven's Progressive Matrices that they have, for example, gave me a lot of insight into how the brain might actually solve that problem.

Likewise with other models that we've seen in AI, where they are now also taking on these Raven's Progressive Matrices tasks, which are one of the classic tests of general intelligence, and that's why they're very attractive to model with these kinds of AI models. But the whole point is that it's a test of your on-the-fly ability to solve these kinds of novel problems. And yet they're training these models, or hand-configuring them, in a special-purpose way to solve that task. And once you do that, it sort of negates the entire point of the task, which is that it's supposed to be a transfer task, a novel task that you've never done before. And so it's like, yeah, I can get a system to solve that task. That's not the problem. The problem is how do I get a system that's never done something like that before to figure out how to solve the task on the fly, on its own? That's the real problem. And again, I think that's where having kind of introspective access comes in: okay, well, gee, how am I going to solve this task? Well, I know there are these patterns... just that whole process we would introspectively go through in thinking about how to tackle a new task.

That kind of flexibility, I think, is what's missing.

[01:01:57] Speaker B: Okay, so he was going on about that because we had talked about metacognition earlier and how he thinks it's important. So what say you, Chris Eliasmith, to this charge?

No, I'm just kidding. Sorry, that was kind of long, but I thought it was worth it for context.

[01:02:13] Speaker A: No, no, not at all. It was actually good to get as much context as he provided for the question, because I've had many conversations with Randy and they're often on many different topics.

[01:02:21] Speaker B: Yeah, yeah.

[01:02:21] Speaker A: But yeah, I think he's continuing to somewhat misunderstand how the model works, insofar as he suggests that whatever we want it to do, it will do, regardless of whether it's brain-like or not. I'm struggling, I guess, to know if I should talk specifically about the Raven's Progressive Matrices or about Spaun more generally. Let me start with the latter. So he made the comment that Spaun uses these circular convolutions that we were talking about before, which Tony Plate suggested as a nice way of generating structured vector spaces, and that a lot of the behavior of Spaun is because of that operator. That's actually not true.

That circular convolution operator is a very small part of the model, computationally speaking. It has nothing to do with the motor system or the vision system or the basal ganglia. It's used in some parts of the model: it's used in the working memory model for constructing lists, and it's used in the Raven's Progressive Matrices-like stuff that the model does. But there's so much more going on than that; you wouldn't be able to build a model like Spaun if that was the only operator, or the only compression thing that you could do, for sure.
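For readers who haven't met Tony Plate's holographic reduced representations, here is a minimal sketch of the circular-convolution binding operator being discussed, written as plain arithmetic rather than spiking neurons. It shows binding two role/filler pairs into one list-like vector and then unbinding with the approximate inverse (the involution). The dimension, the vocabulary names (FIRST, TWO, and so on) and the helper function names are illustrative assumptions, not anything taken from Spaun or Nengo.

```python
import math
import random


def random_vector(d, rng):
    """Random vector with i.i.d. N(0, 1/d) elements, so its length is close to 1."""
    return [rng.gauss(0.0, 1.0 / math.sqrt(d)) for _ in range(d)]


def cconv(a, b):
    """Circular convolution, c[i] = sum_j a[j] * b[(i - j) mod d] (HRR binding)."""
    d = len(a)
    return [sum(a[j] * b[(i - j) % d] for j in range(d)) for i in range(d)]


def involution(a):
    """Approximate inverse used for unbinding: a'[i] = a[-i mod d]."""
    d = len(a)
    return [a[(-i) % d] for i in range(d)]


def cosine(a, b):
    ab = sum(x * y for x, y in zip(a, b))
    return ab / math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))


# Hypothetical vocabulary: two positions (roles) and two items (fillers).
rng = random.Random(0)
d = 256
vocab = {name: random_vector(d, rng) for name in ["FIRST", "SECOND", "TWO", "SEVEN"]}

# A two-item "list": FIRST (*) TWO + SECOND (*) SEVEN, superposed in one vector.
memory = [x + y for x, y in zip(cconv(vocab["FIRST"], vocab["TWO"]),
                                cconv(vocab["SECOND"], vocab["SEVEN"]))]

# Unbind the FIRST slot; the result is a noisy copy of TWO, cleaned up by
# picking the most similar vocabulary item.
noisy = cconv(memory, involution(vocab["FIRST"]))
best = max(vocab, key=lambda name: cosine(noisy, vocab[name]))
print(best)
```

The unbound vector is only approximately equal to the stored filler, which is why a clean-up step (here, a nearest-neighbour lookup) is needed; the sketch is meant only to make the operator concrete, consistent with Chris's point that it is one small piece of a much larger model.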

So that's thing number one, maybe not that significant in a way. We do use it; it's just not the whole model, as it sort of sounded in some regards.

And I think, critically, the important thing that does follow from that is this: Randy seems to believe that because we can construct representations using operators like that, representations that look like computer science representations (as I was just discussing, and which I think is a strength of that operator), we must be manipulating them the way computer science does. But it doesn't mean that we are writing down algorithms like computer science algorithms to manipulate symbol-like structures the way most people in AI would if they were trying to solve Raven's Progressive Matrices. In fact, this was one of the big emphases for us in presenting that model: to say, hey, look, just like Randy was saying, Raven's Progressive Matrices are supposed to be about coming to a problem that you haven't seen before and solving it without knowing what the right relationships are between all of these elements in the matrix.

[01:04:41] Speaker B: Right.

[01:04:41] Speaker A: You have to figure that out from square one. And our proposal about how that worked was unique precisely because it didn't build in the rules that you needed to solve the problem. So in essence, the thing that Randy was asking for is in our model; that's one of the special properties of that model.

[01:04:58] Speaker B: So you think he's hung up on it being a computer-science-methods type of approach, just because of the functions?

[01:05:06] Speaker A: I think he's hung up on the fact that we call it the Neural Engineering Framework, and that engineers build things to have specific functions, typically regardless of low-level constraints. But if you actually look at how biologically plausible Spaun is compared to Leabra, I think it's a fairly strong argument to say that Spaun is far more biologically plausible.

We have everything in spiking neurons. We use all the time constants that you find in the real synaptic connections. In the majority of Leabra models, they put in a max function, which no neurons compute, and they assume it happens instantaneously, which again prevents the dynamics of the model from being realistic. So yeah, in some ways I think that kind of debate is not irrelevant from the biological realism perspective. But here he's more concerned with the high-level techniques: what assumptions do you or don't you build into the model in order to get it to work? And in the case of our Raven's Progressive Matrices model, the model literally learns for each matrix how to do the inference; it is learning exactly like he's asking. The difference is it's not changing the weights, and most of their models change weights. So the learning that happens in our Raven's Progressive Matrices model, as in many others, happens in the activities. But in the brain, learning happens in the activities too; that's what working memory is, and so on. So yeah, I don't know. It's a criticism which I think misses the mark. In fact, one of the strengths of the model is precisely that it learns and infers these new patterns that it's never seen before.
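To make "learning in the activities rather than the weights" concrete, here is a hedged sketch of one way a rule can be induced from examples using the same HRR operators: estimate a transformation vector T from example pairs (a, b) with b = T (*) a by averaging a' (*) b, then apply T to a new cell. This illustrates the general idea only; it is not the published Spaun or Raven's Progressive Matrices model, and the hidden rule, the number of examples, the dimension and all function names are assumptions chosen for illustration.

```python
import math
import random


def random_vector(d, rng):
    """Random vector with i.i.d. N(0, 1/d) elements."""
    return [rng.gauss(0.0, 1.0 / math.sqrt(d)) for _ in range(d)]


def cconv(a, b):
    """Circular convolution (HRR binding)."""
    d = len(a)
    return [sum(a[j] * b[(i - j) % d] for j in range(d)) for i in range(d)]


def involution(a):
    """Approximate inverse for unbinding: a'[i] = a[-i mod d]."""
    d = len(a)
    return [a[(-i) % d] for i in range(d)]


def cosine(a, b):
    ab = sum(x * y for x, y in zip(a, b))
    return ab / math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))


def infer_transform(pairs):
    """Estimate T with b ~= T (*) a by averaging a' (*) b over example pairs.

    The estimate is just a vector (an activity pattern held in memory);
    no connection weights are changed anywhere.
    """
    d = len(pairs[0][0])
    total = [0.0] * d
    for a, b in pairs:
        total = [t + x for t, x in zip(total, cconv(involution(a), b))]
    return [t / len(pairs) for t in total]


rng = random.Random(1)
d = 256
hidden_rule = random_vector(d, rng)  # hypothetical rule relating cells in a row

# Three example pairs standing in for the cells already visible in a matrix.
examples = []
for _ in range(3):
    a = random_vector(d, rng)
    examples.append((a, cconv(hidden_rule, a)))

T = infer_transform(examples)

# Apply the induced rule to a new cell and compare with the true answer
# and with an unrelated distractor.
new_cell = random_vector(d, rng)
prediction = cconv(T, new_cell)
cos_target = cosine(prediction, cconv(hidden_rule, new_cell))
cos_distractor = cosine(prediction, random_vector(d, rng))
print(round(cos_target, 2), round(cos_distractor, 2))
```

The induced vector T is only an approximation of the hidden rule, and averaging over more example pairs reduces its noise; the design choice being illustrated is that the "learned" rule lives in a represented vector, not in changed synaptic weights.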

[01:06:45] Speaker B: Part of what you were... I have one more question from him in just a second. Part of what you were saying about Spaun being bidirectional is, I think, one of its greatest strengths as well. I mean, you are trying to produce psychological functions, but this goes back to the conversation earlier about levels of scale and descriptions at different levels. And you're at pains to remind the reader throughout the book and your other work that, yeah, you do want psychological function; the behavioral output is what it's all about eventually. But you also want the implementation details to reflect how brains might be doing it. So yeah, all right, I'm going to play one more question from him and then we'll move on.

[01:07:33] Speaker A: Sounds good.

[01:07:35] Speaker C: The other question to ask Chris is: do they clearly explain that the spiking is added on after? Like, you program the model using dynamic equations, continuous dynamic partial differential equations, and then they kind of add on the spiking afterwards. And when I learned that, it was kind of like... because in his talks he makes a big deal about how it's a spiking network, but it's first a non-spiking network, and then it becomes a spiking network after the fact. And so to me that was like, okay, well, how much is really different between the spiking network and the original differential-equation version of the model? So anyway, you could ask him that.

[01:08:22] Speaker B: All right, shots fired. I like it.

So I should...

We had also been talking... I'm sure you know this because you talk with Randy. He's been, I don't know if struggling is the right word, but he's been thinking about whether he needs to add spiking to his connectionist networks in Leabra. And we talked about that either before or after this comment. So you make a big deal out of it.

[01:08:49] Speaker A: Yes, I do, because it's true. It's all spiking. I'm actually not quite sure what he means by adding it after. Also, interestingly, we've already touched on this. You asked me a question earlier: do you ever learn anything? Do you discover new functions? And my answer was basically yes: you put it into spikes and find that you aren't actually computing the function you wrote down to start with. So being in spikes is important. And it's definitely not the case that you preserve your original hypothesis about what the dynamics might be once you simulate the real spiking neural system. The dynamical system you simulate is not the one that you wrote the equations down for, and it's the one you simulate that matters. So it, quote unquote, really is spiking. We never simulate the non-spiking system in the final Spaun model. Right. It's really all spiking neurons. They're just neurons; they're just connected. Each neuron has its own differential equation. That's all we simulate. None of the high-level stuff plays any role in the simulation at all. So I'm not quite sure. I imagine he's equating that high-level state representation stuff we were talking about with the model, but ultimately it's not the model. Right. The thing that we simulate is the spiking neurons; there's no adding on spikes after. I'm not quite sure what that means, to be honest.
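Chris's point that "each neuron has its own differential equation" can be made concrete with the leaky integrate-and-fire (LIF) neuron, the neuron model commonly used in NEF models. The sketch below contrasts a closed-form firing-rate curve (the kind of non-spiking description Randy seems to have in mind) with an explicit spike-by-spike simulation of the neuron's own equation. The time constants are assumed typical values, the input current is normalized so the firing threshold is 1, and the function names are hypothetical; this is not Spaun's code.

```python
import math


def lif_rate(J, tau_rc=0.02, tau_ref=0.002):
    """Steady-state firing rate (Hz) of an LIF neuron with constant input J.

    Closed form for tau_rc * dV/dt = -V + J with threshold 1, reset to 0,
    and an absolute refractory period tau_ref. Below threshold it never fires.
    """
    if J <= 1.0:
        return 0.0
    return 1.0 / (tau_ref - tau_rc * math.log(1.0 - 1.0 / J))


def simulate_lif(J, t_total=1.0, dt=1e-5, tau_rc=0.02, tau_ref=0.002):
    """Euler-integrate the same LIF equation and count the spikes it emits."""
    v = 0.0
    refractory = 0.0
    spikes = 0
    for _ in range(int(round(t_total / dt))):
        if refractory > 0.0:
            # Membrane is clamped while the neuron is refractory.
            refractory -= dt
            continue
        v += dt * (J - v) / tau_rc
        if v >= 1.0:
            spikes += 1
            v = 0.0
            refractory = tau_ref
    return spikes / t_total


for J in (0.5, 1.5, 2.0, 4.0):
    print(J, round(lif_rate(J), 1), simulate_lif(J))
```

For a constant input the two agree closely; the interesting differences appear with time-varying inputs, synaptic filtering and recurrent connections, where the simulated spiking system need not follow the equations you started from, which is consistent with Chris's point that the simulated spiking network is the thing that matters.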

[01:10:11] Speaker B: Well, thank you, Randy, for those questions. And Chris, I already know that I'm going to get a ton of emails about having you two on together at some point, so we'll see about that. But in some ways the pre-recorded questions are nicer because no one can be interrupted, for instance.

[01:10:29] Speaker A: Fair enough, that's right.

[01:10:31] Speaker B: But how do you think of Spaun with respect to Leabra? I mean, we've talked about this a little already, but I asked Randy about Spaun, so I'll ask you about Leabra, I suppose.

[01:10:43] Speaker A: Yeah, I struggle with this question because Leabra is not a model. Right. Leabra is a learning method, kind of like a reinforcement learning technique. And so there is no Leabra model. Spaun is one specific model. You can download it and run it on your computer at home if you want. Right.

[01:10:59] Speaker B: Well, you can download Leabra as well, and I haven't done that either. But it's not a model? You can run simulations; you can simulate things, though, right? So maybe it depends on what you think of as a model.

[01:11:10] Speaker A: Yeah, it's not a model, it's a method. Right. So you would want to compare Leabra to the NEF, the Neural Engineering Framework. That's a technique for constructing and simulating models; Leabra is a technique for constructing and simulating models. They have their PDP++, or emergent now, I guess it's called. Right. And so emergent is like Nengo, Leabra is like the NEF, and Spaun... there's no Leabra counterpart to Spaun that I know of. They've built a lot of really cool large-scale models using Leabra and lots of other techniques, but I don't know which of those to compare Spaun to.

[01:11:40] Speaker B: Right. Yeah. So I know he has plans to embody Leabra, which would be a step toward something more Spaun-like. That would be a model then, correct?

[01:11:50] Speaker A: Yeah. If he has a specific instance where here's the simulation, we're using Leabra to learn stuff in that particular simulation, and I'm going to give that simulation a name, say, whatever, "Lisa," then that would be the thing that you would compare to Spaun.

[01:12:04] Speaker B: Okay, gotcha. Well, okay, maybe... and you don't have to say anything more about this if you don't want to, but maybe you can compare it to the Neural Engineering Framework.